As much as it pains me to mention politics, when it bleeds over into the internet and dirties my data, I’ve gotta type out some words. This is a 2016 article about blocking Trump spam in Universal Google Analytics (sigh, pre-GA4). Oh, these were the innocent days of SEO.

In 8 years, the difference in election malpractice is startling. I’m linking to a couple of Guardian articles dated this week (late February 2024) because I’m very annoyed to read about the bad things people are doing with AI. I’m really, really pissed off that the world is like this.

Robocalls of President Biden already confused primary voters in New Hampshire – but measures to curb the technology could be too little too late. Listen to the deepfakes here and see whether you can spot the deepfake.

Last modified on Mon 26 Feb 2024 22.31 GMT by Rachel Leingang

The 2024 American AI election is here – Now With Deepfake Robocalls

Already this year, a robocall generated using artificial intelligence targeted New Hampshire voters in the January primary, purporting to be President Joe Biden and telling them to stay home in what officials said could be the first attempt at using AI to interfere with a US election.

The “deepfake” calls were linked to two Texas companies, Life Corporation and Lingo Telecom.

It’s not clear if the deepfake calls actually prevented voters from turning out, but that doesn’t really matter, said Lisa Gilbert, executive vice-president of Public Citizen, a group that’s been pushing for federal and state regulation of AI’s use in politics.

“I don’t think we need to wait to see how many people got deceived to understand that that was the point,” Gilbert said.

Examples of what could be ahead for the US are happening all over the world, in Slovakia, Indonesia and India. In India, AI versions of dead politicians have even been brought back to compliment elected officials, according to Al Jazeera.

But US regulations aren’t ready for the boom in fast-paced AI technology and how it could influence voters. Soon after the fake call in New Hampshire, the Federal Communications Commission announced a ban on robocalls that use AI audio.

The FEC has yet to put rules in place to govern the use of AI in political ads, though states are moving quickly to fill the gap in regulation.

At the national level, or with major public figures, debunking a deepfake happens fairly quickly, with outside groups and journalists jumping in to spot a spoof and spread the word that it’s not real. When the scale is smaller, though, there are fewer people working to debunk something that could be AI-generated. Narratives begin to set in. In Baltimore, for example, recordings posted in January of a local principal allegedly making offensive comments could be AI-generated – it’s still under investigation.

In the absence of regulations from the Federal Election Commission (FEC), a handful of states have instituted laws over the use of AI in political ads, and dozens more states have filed bills on the subject. At the state level, regulating AI in elections is a bipartisan issue, Gilbert said. The bills often call for clear disclosures or disclaimers in political ads that make sure voters understand content was AI-generated; without such disclosure, the use of AI is then banned in many of the bills, she said.

The FEC opened a rule-making process for AI last summer, and the agency said it expects to resolve it sometime this summer, the Washington Post has reported. Until then, political ads with AI may have some state regulations to follow, but otherwise aren’t restricted by any AI-specific FEC rules.

“Hopefully we will be able to get something in place in time, so it’s not kind of a wild west,” Gilbert said. “But it’s closing in on that point, and we need to move really fast.”

The US House launched a bipartisan taskforce on 20 February that will research ways AI could be regulated and issue a report with recommendations. But with partisan gridlock ruling Congress, and US regulation trailing the pace of AI’s rapid advance, it’s unclear what, if anything, could be in place in time for this year’s elections.

Without clear safeguards, the impact of AI on the election might come down to what voters can discern as real and not real. AI – in the form of text, bots, audio, photo or video – can be used to make it look like candidates are saying or doing things they didn’t do, either to damage their reputations or mislead voters. It can be used to beef up disinformation campaigns, making imagery that looks real enough to create confusion for voters.

Audio content, in particular, can be even more manipulative because the technology for video isn’t as advanced yet and recipients of AI-generated calls lose some of the contextual clues that something is fake that they might find in a deepfake video. Experts also fear that AI-generated calls will mimic the voices of people a caller knows in real life, which has the potential for a bigger influence on the recipient because the caller would seem like someone they know and trust. Commonly called the “grandparent” scam, callers can now use AI to clone a loved one’s voice to trick the target into sending money. That could theoretically be applied to politics and elections.

“It could come from your family member or your neighbor and it would sound exactly like them,” Gilbert said. “The ability to deceive from AI has put the problem of mis- and disinformation on steroids.”

In Slovakia, fake audio recordings may have swayed an election in what serves as a “frightening harbinger of the sort of interference the United States will likely experience during the 2024 presidential election”, CNN reported. In Indonesia, an AI-generated avatar of a military commander helped rebrand the country’s defense minister as a “chubby-cheeked” man who “makes Korean-style finger hearts and cradles his beloved cat, Bobby, to the delight of Gen Z voters”, Reuters reported. In India, AI versions of dead politicians have been brought back to compliment elected officials, according to Al Jazeera.

There are less misleading uses of the technology to underscore a message, like the recent creation of AI audio calls using the voices of kids killed in mass shootings aimed at swaying lawmakers to act on gun violence. Some political campaigns even use AI to show alternate realities to make their points, like a Republican National Committee ad that used AI to create a fake future if Biden is re-elected. But some AI-generated imagery can seem innocuous at first, like the rampant faked images of people next to carved wooden dog sculptures popping up on Facebook, but then be used to dispatch nefarious content later on.

Cybersecurity and the Wizard of Oz in AI

People wanting to influence elections no longer need to “handcraft artisanal election disinformation”, said Chester Wisniewski, a cybersecurity expert at Sophos. Now, AI tools help dispatch bots that sound like real people more quickly, “with one bot master behind the controls like the guy on the Wizard of Oz”.

Perhaps most concerning, though, is that the advent of AI can make people question whether anything they are seeing is real or not, introducing a heavy dose of doubt at a time when the technologies themselves are still learning how to best mimic reality.

Linking Technology with Politics – Katie Harbath

“There’s a difference between what AI might do and what AI is actually doing,” said Katie Harbath, who formerly worked in policy at Facebook and now writes about the intersection between technology and democracy. People will start to wonder, she said, “what if AI could do all this? Then maybe I shouldn’t be trusting everything that I’m seeing.”

Pact but no ban: Google, Meta, Microsoft and OpenAI

Even without government regulation, companies that manage AI tools have announced and launched plans to limit its potential influence on elections, such as having their chatbots direct people to trusted sources on where to vote and not allowing chatbots that imitate candidates. A recent pact among companies such as Google, Meta, Microsoft and OpenAI includes “reasonable precautions” such as additional labeling of and education about AI-generated political content, though it wouldn’t ban the practice.

But bad actors often flout or skirt around government regulations and limitations put in place by platforms. Think of the “do not call” list: even if you’re on it, you still probably get some spam calls.

[end]

2016: How To Block Trump Spam in Google Analytics

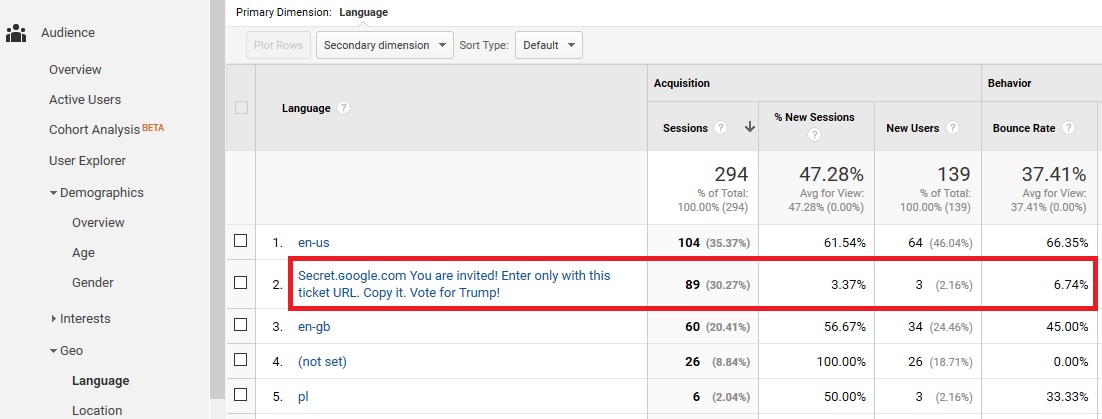

Annoying, isn’t it? This new bot from Russia is dirtying Google Analytics with fake hits, so your reports make it look like you are getting lots of visits to your website when those visits are fake.

The Trump spam is also impersonating legit websites like thenextweb.com, abc.xyz, and techcrunch.com, so you think you are getting hits from these notable websites. By implementing this fix you will strip out the fake hits.

2016 continued: Using Filters at Language Level to Block Trump Spam

Setting up a view-level filter is fairly simple, but note that it permanently changes the data collected from that point forward, so be careful when using it, especially if you have little prior experience with view filters. The filter I propose will exclude any traffic (hits) where the language dimension contains 12 or more characters. Since most legitimate language settings sent by browsers are 5-6 characters long, and traffic with even 8-9 characters in this field is rare, it should only filter out language spam.

In addition to that, there are characters which are invalid in the language field but which can be used to construct a domain name (or what looks like one, such as “secret google com”, “secret,google,com”, “secret!google!com”), so we can exclude those as well.

The resulting regular expression we’ll use looks like this:
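To make the two rules described above concrete, here is a minimal Python sketch of a pattern that follows them. The pattern is an assumption built from the description (12 or more characters, or a space, dot, comma or exclamation mark anywhere in the value); it is not necessarily the exact expression from the original tip. The `is_language_spam` helper is hypothetical and only exists so you can try sample values before touching a real view.

```python
import re

# Assumed pattern, reconstructed from the description in the text,
# NOT necessarily the expression used in the original tip:
#   .{12,}    a language value with 12 or more characters
#   [\s.,!]   whitespace, dot, comma or exclamation mark anywhere in the value
LANGUAGE_SPAM = re.compile(r".{12,}|[\s.,!]")


def is_language_spam(language: str) -> bool:
    """Return True if the language value looks like spam under the rules above."""
    # search() is used so the pattern may match anywhere in the value
    return LANGUAGE_SPAM.search(language) is not None


samples = [
    "en-us",
    "pt-br",
    "zh-hans-cn",
    "secret.google.com",
    "secret,google,com",
    "secret!google!com",
    "secret google com",
]

for value in samples:
    verdict = "exclude" if is_language_spam(value) else "keep"
    print(f"{value!r}: {verdict}")
```

Ordinary language codes such as `en-us` are kept, while the domain-style values are flagged. It is worth checking any pattern like this against your own language report before relying on it.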

You need to construct the “Exclude Language Spam” filter as shown in the screenshot below:

Please note: The above is an updated tip courtesy of Carlos Escalera and his comprehensive blog post “How to Prevent and Remove the Spam from your Google Analytics”.

Make sure to filter on the “Language Settings” dimension. You need “Edit” access at the “Account” level in Google Analytics in order to set up new filters, so make sure you have that, or you won’t even see the setup.

You can use the “Verify Filter” option to see how it would affect data from the last few days.

‘Verify Filter’ Trump Spam 2016

Remember to filter your IP address too!

Google Analytics Help shows you how, and it’s really simple, I promise!

Create an IP address filter

To prevent internal traffic from affecting your data, you can use a filter to filter out traffic by IP address.

You can find the public IP address you are currently using with a “what is my IP address” web checker. You can find out what IP addresses and subnets your company uses by asking your network administrator.

To create an IP address filter:

- Follow the instructions to create a new filter for your view.

- Leave the Filter Type as Predefined.

- Click the Select filter type drop-down menu and select Exclude.

- Click the Select source or destination drop-down menu and select traffic from the IP addresses.

- Click the Select expression drop-down menu and select the appropriate expression.

- Enter the IP address or a regular expression.
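If you go the regular expression route in the last step, the dots in an IP address need escaping, because an unescaped `.` matches any character. Below is a minimal Python sketch for building such a pattern. The `ip_filter_pattern` helper is hypothetical, and `203.0.113.7` is a placeholder from the documentation address range, not a real office IP.

```python
import re


def ip_filter_pattern(ip: str) -> str:
    """Build an anchored regex that matches exactly one IPv4 address."""
    # re.escape turns each "." into "\." so it matches a literal dot;
    # ^ and $ stop the pattern from matching longer addresses such as 203.0.113.70
    return "^" + re.escape(ip) + "$"


# Placeholder address, swap in your own public IP
pattern = ip_filter_pattern("203.0.113.7")
print(pattern)

# Quick check of what the pattern does and does not match
for candidate in ["203.0.113.7", "203.0.113.70", "203x0x113x7"]:
    matched = re.search(pattern, candidate) is not None
    print(f"{candidate}: {'excluded' if matched else 'not matched'}")
```

The printed pattern is what you would paste into the filter field. Only the exact address is matched, so colleagues on neighbouring addresses would each need their own entry or a wider expression.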

Originally Published on: 28 November 2016 at 18:00